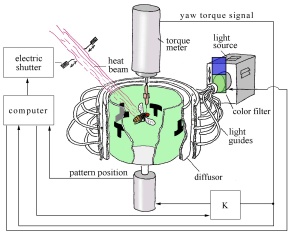

The following discussion is based on experiments described by Heisenberg (1991), Heisenberg, Wolf and Brembs (2001), and Brembs (1996, 2003), with the fruit fly Drosophila melanogaster at the flight simulator.

Readers are strongly advised to familiarise themselves with the experimental set-up, which is described in an Appendix, before going any further.

Innate preferences.

A biologically significant stimulus (the US) figures prominently in all forms of associative learning. The animal's attraction or aversion to this stimulus is taken as a given. In other words, associative learning is built upon a foundation of innate preferences. I shall argue that even animals that qualify as bona fide agents cannot act without having these preferences.

Action selection.

Prescott (2001, p. 1) defined action selection as the problem of "resolving conflicts between competing behavioural alternatives". A tethered fruit fly has four basic motor patterns that it can activate - in other words, four degrees of freedom. It can adjust its yaw torque, lift/thrust, abdominal position or leg posture (Heisenberg, Wolf and Brembs, 2001, p. 2). In the experiments described above, the fly was certainly engaging in action selection when undergoing operant conditioning. The behavioural alternative (or motor pattern) selected by the fly was the one which enabled it to avoid the heat. The mechanism by which it did so was its ability to identify correlations between its behaviour and events in its surroundings:

As the fly initially has no clue as to which behavior the experimenter chooses for control of the arena movements, the animal has no choice but to activate its repertoire of motor outputs and to compare this sequence of activations to the dynamics of arena rotation until it finds a correlation (Heisenberg, Wolf and Brembs, 2001, p. 2).

It has been argued above (Conclusions A.11 and A.12) that "action selection" - even if centralised - does not, by itself, warrant a mentalistic interpretation, as it can be generated by fixed action patterns. Below, I shall defend the view that an animal which uses internal representations to fine-tune one of its motor patterns in order to attain a goal is a bona fide agent which believes that it can get what it wants by modifying its behaviour patterns.

Fine-tuning

It will be recalled that Abramson (1994) drew a distinction between instrumental behaviour (which he defined as "behavior defined by its consequences") and operant behaviour, in which an animal demonstrates that it can "operate some device - and know how to use it, that is, make an arbitrary response to obtain reinforcement" (1994, p. 151, italics mine). The term "arbitrary" was left undefined, although elsewhere (personal email, 2 February 2003) Abramson suggested that an animal capable of operant behaviour should be able to fine-tune its response in order to attain its goal.

Action selection cannot be regarded as agency, properly speaking, unless it can be fine-tuned or controlled. Prescott (2001, p. 12) explained the meandering behaviour of flatworms in terms of four general motor patterns, but as the worms did not appear capable of modifying these patterns, it was argued that they should not be called actions; Prescott himself called them "reactive behaviors". The same comments apply to instrumental conditioning, as we saw above. An animal's acquisition of new patterns of behaviour caused by learned connections between As and Bs does not require it to be an agent; being a good correlator will do. And while I agree with Heisenberg, Wolf and Brembs' contention that a fly placed in a rotating arena for the first time "is 'trying out' its entire behavioral repertoire" (2001, p. 2), I suggest that the ascription of trying to the fly would be unwarranted if it did nothing else to achieve its goal. It is the fly's ability to fine-tune its patterns of movement which warrants the ascription of agency to it. I shall endeavour to explain below why a mentalistic agent-centred intentional stance is the most appropriate stance for explaining this fine-tuning behaviour.

As noted above, a tethered fruit fly has four basic motor patterns that it can activate: it can adjust its yaw torque, lift/thrust, abdominal position or leg posture (Heisenberg, Wolf and Brembs, 2001, p. 2). Each motor program can be implemented at different strengths or values. At any moment, a fly's yaw torque has a particular magnitude and direction, its thrust a particular intensity, and so on.

Definition of fine-tuning

We can define fine-tuning as the stabilisation of a basic motor pattern at a particular value or within a narrow range of values, in order to achieve a goal. In other words, fine-tuning is a refinement of action selection.

Does the operant behaviour of Brembs' fruit flies qualify as fine-tuning, as defined by Abramson? As Heisenberg (personal email, 6 October 2003) points out, in the simplest case (pure operant conditioning), all that the flies had to learn was: "Don't turn right." The range of permitted behaviour (flying anywhere in the left domain) is too broad for us to call this fine-tuning. However, in flight simulator (fs) mode, the flies had to stabilise a rotating arena by modulating their yaw torque, and they also had to stay within a safe zone to avoid the heat. In other experiments (Brembs, 2003), flies were able to adjust their thrust to an arbitrary level that stopped their arena from rotating. The ability of the flies to narrow their yaw torque range or their thrust to a specified range, in order to avoid heat, surely meets Abramson's requirements for fine-tuning. We can conclude that Drosophila is capable of true operant behaviour.
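The definition of fine-tuning given above can be made concrete with a small, purely illustrative sketch. The function below treats a recorded trace of one motor pattern (say, yaw torque in arbitrary normalised units) as fine-tuned if its most recent samples stay within a band that is narrow relative to the pattern's full range. The window length, the 20% threshold and the two sample traces are assumptions chosen for illustration, not values taken from the Drosophila experiments.

```python
def is_fine_tuned(trace, full_range, max_fraction=0.2, window=20):
    """Illustrative test: has a motor-pattern trace stabilised within a narrow band?

    trace        -- successive values of one motor pattern (hypothetical units)
    full_range   -- (low, high) limits of that pattern
    max_fraction -- widest band, as a fraction of the full range, that still
                    counts as "narrow" (an assumed threshold)
    window       -- number of most recent samples examined (an assumed value)
    """
    if len(trace) < window:
        return False  # too little behaviour to judge stabilisation
    recent = trace[-window:]
    band = max(recent) - min(recent)
    low, high = full_range
    return band <= max_fraction * (high - low)


# Hypothetical traces in normalised yaw-torque units, full range -1.0 to 1.0.
# "Don't turn right": anything in the left domain will do, so values wander widely.
left_domain_trace = [-0.9 if i % 2 == 0 else -0.1 for i in range(40)]
# Stabilised: the value is held close to a single set point.
stabilised_trace = [0.05 * (-1) ** i for i in range(40)]

print(is_fine_tuned(left_domain_trace, (-1.0, 1.0)))  # False
print(is_fine_tuned(stabilised_trace, (-1.0, 1.0)))   # True
```

The first trace mimics the "Don't turn right" case, in which any value in the left domain is acceptable; the second mimics a fly holding its yaw torque near a set value, as in fs-mode.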

Grau (2002, p. 85), following Skinner, proposed a distinction between instrumental conditioning and operant learning, where "the brain can select from a range of response alternatives that are tuned to particular environmental situations and temporal-spatial relations" (italics mine). The terminology used here accords with the notions of action selection and fine-tuning, although the language is a little imprecise. It would be better, I suggest, to say that the brain first makes a selection from a range of "response alternatives" (motor patterns) and then fine-tunes it to a "particular environmental situation".

Internal representations and minimal maps

As we saw above, Dretske (1999, p. 10) proposed that in operant conditioning, an organism's internal representations are harnessed to control circuits "so that behaviour will occur in the external conditions on which its success depends". The idea that operant conditioning was mediated by representations suggested a promising mentalistic account of operant behaviour. However, Dretske's notion of control seemed too passive and his theory of representation too minimalistic to clearly differentiate instrumental from operant conditioning, or to justify a mentalistic interpretation of the latter.

Ramsey's notion that a typical belief is a map could serve to flesh out Dretske's account:

A belief of the primary sort is a map of neighbouring space by which we steer. It remains such a map however much we complicate it or fill in details (1990, p. 146).

I do not wish to claim that all or even most beliefs are map-like representations. I merely wish to propose that Ramsey's account of belief is a useful way of looking at some animal beliefs, including operant beliefs.

Before I go any further, I had better explain what I mean by a map. Stripped to its bare bones, a map must do three things: it must tell you where you are now, where you want to go and how to get to where you want to go - your current state, your goal and a suitable means for getting there. The word "where" in my definition need not refer to a place, but it must refer to a specific state - for instance, a specific color, temperature, size, angle, speed or intensity, or at least a relatively narrow range of values. A map need not be spatial, but it must be concrete.

The minimal map I am proposing here could also be described as an action schema. The term "action schema" is used rather loosely in the literature, but Perner (2003, p. 223, italics mine) offers a good working definition: "[action] schemata (motor representations) not only represent the external conditions but the goal of the action and the bodily movements to achieve that goal."

I am not proposing that Drosophila has a "cognitive map" representing its surroundings. In a cognitive map, "the geometrical relationships between defined points in space are preserved" (Giurfa and Capaldi, 1999, p. 237). As we shall see, the existence of such maps even in honeybees remains controversial. What I am suggesting is something more modest. First, Drosophila can form internal representations of its own bodily movements, for each of its four degrees of freedom, within its brain and nervous system.

Second, it can either (a) directly associate these bodily movements with good or bad consequences, or (b) associate its bodily movements with sensory stimuli (used for steering), which are in turn associated with good or bad consequences. In case (a), Drosophila uses an internal motor map; in case (b), it uses an internal sensorimotor map. In neither case need we suppose that it has a spatial grid map.

A map, or action schema, allows the fly to fine-tune the motor program it has selected. I propose that the existence of a map-like representation of an animal's current state, goal and pathway to its goal is what differentiates operant from merely instrumental conditioning.

For an internal motor map, the current state is simply the present value of the motor plan the fly has selected (e.g. the fly's present yaw torque), the goal is the value of the motor plan that enables it to escape the heat (e.g. the safe range of yaw torque values), while the means for getting there is the appropriate movement for bringing the current state closer to the goal. For an internal sensorimotor map, the current state is the present value of its motor plan, coupled with the present value of the sensory stimulus (color or pattern) that the fly is using to navigate; the goal is the color or pattern that is associated with "no-heat" (e.g. an inverted T); and the means for getting there is the manner in which it has to fly to keep the "no-heat" color or pattern in front of it.
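The two kinds of map can be sketched as small data structures, each holding the three ingredients named above: a current state, a goal and a means. This is a hypothetical illustration, not a model of Drosophila's nervous system. The class names, the normalised torque units, the arena layout (two upright and two inverted T-patterns at 90° intervals) and the 45° tolerance are all assumptions, and the "means" is reduced to a direction of adjustment (+1, -1 or 0).

```python
from dataclasses import dataclass


@dataclass
class MotorMap:
    """Hypothetical internal motor map for one degree of freedom."""
    current: float    # present value of the selected motor pattern (e.g. yaw torque)
    goal_low: float   # lower edge of the range that avoids the heat
    goal_high: float  # upper edge of that range

    def at_goal(self) -> bool:
        return self.goal_low <= self.current <= self.goal_high

    def means(self) -> int:
        """Direction that brings the current state closer to the goal."""
        if self.current < self.goal_low:
            return 1
        if self.current > self.goal_high:
            return -1
        return 0


def signed_turn(heading: float, target: float) -> float:
    """Smallest signed rotation in degrees from heading to target, in [-180, 180)."""
    return (target - heading + 180.0) % 360.0 - 180.0


@dataclass
class SensorimotorMap:
    """Hypothetical sensorimotor map: current heading plus the patterns on the arena."""
    heading: float      # current orientation relative to the arena, in degrees
    patterns: list      # (pattern name, angular position) pairs
    goal_pattern: str = "inverted T"  # pattern associated with "no heat"
    tolerance: float = 45.0           # how far off-centre still counts as "in front"

    def pattern_in_front(self) -> str:
        name, _ = min(self.patterns, key=lambda p: abs(signed_turn(self.heading, p[1])))
        return name

    def _turn_to_goal(self) -> float:
        turns = [signed_turn(self.heading, angle)
                 for name, angle in self.patterns if name == self.goal_pattern]
        return min(turns, key=abs)

    def at_goal(self) -> bool:
        return abs(self._turn_to_goal()) <= self.tolerance

    def means(self) -> int:
        """Turning direction that keeps the goal pattern in front (0 = hold course)."""
        if self.at_goal():
            return 0
        return 1 if self._turn_to_goal() > 0 else -1


ARENA = [("upright T", 0.0), ("inverted T", 90.0),
         ("upright T", 180.0), ("inverted T", 270.0)]

print(MotorMap(current=0.6, goal_low=-0.2, goal_high=0.2).means())  # -1
fly = SensorimotorMap(heading=10.0, patterns=ARENA)
print(fly.pattern_in_front(), fly.means())  # upright T 1
```

With a heading of 95° instead, the same sensorimotor map reports an inverted T in front and a means of 0 (hold course).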

I maintain that Drosophila employs an internal sensorimotor map when it is undergoing fs-mode learning. I suggest that Drosophila might use an internal motor map when it is undergoing pure operant conditioning (yaw torque learning). (I am more tentative about the second proposal, because as we have seen, in the case of yaw torque learning, Drosophila may not be engaging in fine-tuning at all, and hence may not need a map to steer by.) Drosophila may make use of both kinds of maps while flying in sw-mode, as it undergoes parallel operant conditioning.

An internal motor map, if it existed, would be the simplest kind of map, but it may be possible to explain pure operant conditioning (yaw torque learning) without positing a map at all: the fly may be simply forming an association between a kind of movement (turning right) and heat (Heisenberg, personal email, 6 October 2003). An association, by itself, does not qualify as a map, because it does not include information about Drosophila's current state. Accordingly, what Brembs (2000) calls "pure operant conditioning" may turn out to be nothing more than instrumental conditioning. To rule out this possibility, one would have to show that Drosophila can fine-tune one of its motor patterns (e.g. its thrust) while undergoing pure operant conditioning.

Wolf's and Heisenberg's model of agency

My description of Drosophila's internal map draws heavily upon the conceptual framework for operant conditioning developed by Wolf and Heisenberg (1991, pp. 699-705). Their model includes: (i) a goal; (ii) a range of motor programs which are activated in order to achieve the goal; (iii) a comparison between the animal's motor output and its sensory stimuli, which indicate how far it is from its goal; (iv) when a temporal coincidence is found, a motor program is selected to modify the sensory input so the animal can move towards its goal. These four conditions describe operant behaviour. Finally, (v) if the animal consistently controls a sensory stimulus by selecting the same motor program, a more permanent change in its behaviour may result: operant conditioning.
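Steps (ii) to (iv) of this model can be illustrated with a toy simulation. In a hypothetical trial, the experimenter couples arena rotation to one of the fly's four motor outputs; the simulated fly varies all four outputs at random, compares each with the arena's motion, and selects the one whose activity correlates most strongly with it. The gain, trial length, uniform noise and the use of Pearson correlation as the comparison are assumptions made for the sketch, not claims about how Drosophila actually computes correlations.

```python
import random

MOTOR_PROGRAMS = ["yaw torque", "lift/thrust", "abdominal position", "leg posture"]


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def run_trial(coupled, steps=200, gain=-1.5, seed=0):
    """Simulate a trial in which only the `coupled` program moves the arena."""
    rng = random.Random(seed)
    outputs = {p: [] for p in MOTOR_PROGRAMS}
    arena_velocity = []
    for _ in range(steps):
        # Step (ii): the whole repertoire is activated with spontaneous variation.
        current = {p: rng.uniform(-1.0, 1.0) for p in MOTOR_PROGRAMS}
        for p, v in current.items():
            outputs[p].append(v)
        # The experimenter's coupling: arena motion follows one output only.
        arena_velocity.append(gain * current[coupled])
    return outputs, arena_velocity


def select_program(outputs, arena_velocity):
    """Steps (iii)-(iv): compare each output with the arena and pick the best correlate."""
    scores = {p: abs(pearson(vals, arena_velocity)) for p, vals in outputs.items()}
    return max(scores, key=scores.get), scores


outputs, arena = run_trial("yaw torque")
chosen, scores = select_program(outputs, arena)
print(chosen)  # yaw torque
```

Changing `coupled` to `"lift/thrust"` makes the same procedure select thrust, which mirrors the point that the fly must discover, rather than be told, which of its behaviours controls the arena.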

The main difference between this model and my proposal is my emphasis on control: I propose that Drosophila not only selects a motor program (action selection), but also refines it through a fine-tuning process, and in so doing, exercises control over its bodily movements. In the fine-tuning process I describe, there is a continual inter-play between Drosophila's "feed-back" and "feed-forward" mechanisms.

Drosophila at the torque meter can adjust its yaw torque, lift/thrust, abdominal position or leg posture. I propose that the fly has an internal motor map or sensorimotor map corresponding to each of its four degrees of freedom, and that once it has selected a motor program, it can use the relevant map to steer itself away from the heat.

How might the fly form an internal representation of the present value of its motor plan? Wolf and Heisenberg (1991, p. 699) suggest that the fly maintains an "efference copy" of its motor program, in which "the nervous system informs itself about its own activity and about its own motor production" (Legrand, 2001). The concept of an efference copy was first mooted in 1950, when it was suggested that "motor commands must leave an image of themselves (efference copy) somewhere in the central nervous system" (Merfeld, 2001, p. 189). In an Appendix, I discuss a detailed model proposed by Merfeld (2001) of how the nervous system forms an internal representation of the dynamics of its sensory and motor systems.

Heisenberg (personal email, 15 October 2003) has recently summarised what we know about internal representations in Drosophila (see Appendix).

Actions and their consequences: the importance of associations and their relation to the motor map.

The foregoing discussion of how the fly represents its motor plan left unanswered the question of how the fly represents its goal and pathway (or means to reaching its goal) on its internal map. If the fly is using an internal motor map, the goal (escape from the heat) could be represented as a stored motor memory of the pattern (flying clockwise) which allows the fly to stay out of the heat, and the pathway as a stored motor memory of the movement (flying into the left domain) which allows the fly to get out of the heat. (I must stress that the existence of such motor memories in Drosophila is purely speculative; they are simply posited here to explain how an internal motor map could work, if it exists.) Alternatively, if the fly is using an internal sensorimotor map, the goal is encoded as a stored memory of a sensory stimulus (e.g. the inverted T) that the fly associates with the absence of heat, while the pathway is the stored memory of a sequence of sensory stimuli which allows the animal to steer itself towards its goal.

These representations presuppose that the fly can form associations between things as diverse as motor patterns, shapes and heat. Heisenberg, Wolf and Brembs (2001, p. 6) suggest that Drosophila possesses a multimodal memory, in which "colors and patterns are stored and combined with the good and bad of temperature values, noxious odors, or exafferent motion". In other words, the internal representation of the fly's motor commands has to be coupled with the ability to form and remember associations between different possible actions (yaw torque movements) and their consequences. Heisenberg explains why these associations matter:

A representation of a motor program in the brain makes little sense if it does not contain, in some sense, the possible consequences of this motor program (personal email, 15 October 2003).

On the hypothesis which I am defending here, the internal motor map (used in sw-mode and possibly in yaw torque learning) directly associates different yaw torque values with heat and comfort. The internal sensorimotor map, which the fly uses in fs-mode and sw-mode, indirectly associates different yaw torque values with good and bad consequences. For instance, different yaw torque values may be associated with the upright T-pattern (the CS) and inverted T-pattern, which are associated with heat (the US) and comfort respectively.
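The difference between direct and indirect association can be pictured as the difference between one lookup table and two chained ones. The sketch below is a deliberate simplification and entirely hypothetical: yaw torque is reduced to two bins, and each bin is paired with a single pattern, whereas in flight-simulator mode the pattern that ends up in front also depends on the fly's heading. The table and function names are illustrative only.

```python
# Simplified: yaw torque reduced to two bins, each paired with one pattern.
direct_map = {"left yaw torque": "comfort", "right yaw torque": "heat"}

motor_to_pattern = {"left yaw torque": "inverted T", "right yaw torque": "upright T"}
pattern_to_consequence = {"upright T": "heat", "inverted T": "comfort"}


def direct_consequence(motor_value, associations=direct_map):
    """Internal motor map: the motor value is linked straight to its consequence."""
    return associations[motor_value]


def indirect_consequence(motor_value, to_pattern=motor_to_pattern,
                         to_consequence=pattern_to_consequence):
    """Internal sensorimotor map: motor value -> pattern -> consequence."""
    return to_consequence[to_pattern[motor_value]]


def reverse_contingency(associations):
    """Return a copy with heat and comfort swapped, as when the inverted T comes to signal heat."""
    swap = {"heat": "comfort", "comfort": "heat"}
    return {key: swap[value] for key, value in associations.items()}


print(direct_consequence("right yaw torque"))    # heat
print(indirect_consequence("right yaw torque"))  # heat
reversed_patterns = reverse_contingency(pattern_to_consequence)
print(indirect_consequence("left yaw torque", to_consequence=reversed_patterns))  # heat
```

In the indirect case, a single change to the pattern-consequence table alters the predicted consequence of every motor value that leads to that pattern, without any change to the motor-pattern table.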

Sensory input also plays a key role: it informs the animal whether it has attained its goal, and if not, whether it is getting closer to achieving it. A fly undergoing operant conditioning in sw-mode or fs-mode needs to continually monitor its sensory input, so as to minimise its deviation from its goal (Wolf and Heisenberg, 1991; Brembs, 1996, p. 3). By contrast, a fly undergoing yaw torque learning has no sensory inputs that tell it if it is getting closer to its goal: it is flying blind, as it were.

The animal must also possess a correlation mechanism, allowing it to find a temporal coincidence between its motor behaviour and the attainment of its goal. Once it finds a temporal correlation between its behaviour and its proximity to the goal, "the respective motor program is used to modify the sensory input in the direction toward the goal" (Wolf and Heisenberg, 1991, p. 699; Brembs, 1996, p. 3). In the case of the fly undergoing flight simulator training, finding a correlation would allow it to change its field of vision, so that it can keep the inverted T in its line of sight.

Exactly where the fly's internal map is located remains an open question: Heisenberg, Wolf and Brembs (2001, p. 9) acknowledge that we know very little about how flexible behaviour in Drosophila is implemented at the neural level. Recently, Heisenberg (personal email, 15 October 2003) has proposed that the motor program for turning in flight is stored in the ventral ganglion of the thorax, while the multimodal memory (which allows the fly to steer itself and form associations between actions and consequences) is stored in the insect's brain, at a higher level. The map itself may be distributed over the fly's nervous system.

Self-correction.

Legrand (2001) proposes that the "efference copy" of an animal's motor program also gives it a sense of trying to do something, and indicates the necessity of a correction. This brings us to Beisecker's (1999) proposal that animals that are capable of operant conditioning will revise their mistaken expectations and try to correct their mistakes. We can now address the question raised earlier: what sort of behaviour counts as self-correction?

Heisenberg, Wolf and Brembs (2001, p. 3) contend that operant behaviour can be explained by the following rule: "Continue a behaviour that reduces, and abandon a behaviour that increases, the deviation from the desired state." The abandonment referred to here could be called self-correction, if it takes place within the context of operant conditioning.
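The rule quoted above can be expressed as a very small control loop. In this hypothetical sketch, using arbitrary units, a simulated agent adjusts a single motor value by a fixed step; whenever a step increases its deviation from the desired state it reverses direction (abandoning that behaviour), and otherwise it carries on. The fixed step size, starting value and number of steps are assumptions for illustration.

```python
def self_correcting_run(start, desired, step=0.1, max_steps=100):
    """Apply the rule: continue a behaviour that reduces, and abandon one that
    increases, the deviation from the desired state. Returns the visited values."""
    value = start
    direction = 1                    # arbitrary initial choice of behaviour
    deviation = abs(value - desired)
    history = [value]
    for _ in range(max_steps):
        value += direction * step
        new_deviation = abs(value - desired)
        if new_deviation > deviation:
            direction = -direction   # abandon: this behaviour made things worse
        deviation = new_deviation    # otherwise continue in the same direction
        history.append(value)
    return history


trace = self_correcting_run(start=1.0, desired=0.0)
# After an initial wrong move, the agent settles within one step of its goal.
print(all(abs(v) <= 0.1 + 1e-9 for v in trace[-20:]))  # True
```

The simulated agent never stops moving, but it remains within one step of the desired value, which echoes the earlier definition of fine-tuning as stabilisation within a narrow range of values.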

An additional kind of self-correction occurs when an animal continually updates its multimodal associative memory as new information comes to light and as circumstances change (e.g. if the inverted T comes to be associated with heat rather than the absence of it).

Control: what it is and what it is not.

I wish to make it clear that I am not proposing that Drosophila consciously attends to its internal motor (or sensorimotor) map when it adjusts its angle of flight at the torque meter. To do that, it would have to not only possess awareness, but also be aware of its own internal states. In any case, the map is not something separate from the fly, which it can consult: rather, it is instantiated within the fly's body. Instead of picturing the fly as looking at its internal motor map, we should try to imagine it feeling its way around the map, while observing its environment, rather than the map itself. That is, the fly's efference copy enables it to monitor its own bodily movements, and it receives sensory feedback (via the visual display and the heat beam) when it manipulates its bodily movements.

On the other hand, we should not picture the fly as an automaton, passively following some internal program which tells it how to navigate. There would be no room for agency or control in such a picture. Rather, controlled behaviour originates from within the animal, instead of being triggered from without (as reflexes are, for instance). It is efferent, not afferent: the animal's nervous system sends out impulses to a bodily organ (Legrand, 2001). Additionally, the animal takes the initiative when it subsequently compares the output from the nervous system with its sensory input, until it finds a positive correlation (Wolf and Heisenberg, 1991, p. 699; Brembs, 1996, p. 3).

It will be seen that my account of control owes much to Wolf and Heisenberg (1991, quoted in Brembs, 1996, p. 3, italics mine) who define operant behaviour as "the active choice of one out of several output channels in order to minimize the deviations of the current situation from a desired situation", and operant conditioning as a more permanent behavioural change arising from "consistent control of a sensory stimulus."

Acts of control should be regarded as primitive acts: they are not performed by doing something else that is even more fundamental. However, the term "by" also has an adverbial meaning: it can describe the manner in which an act is performed. If we say that a fly controls its movements by following its internal map, this does not mean that map-following is a lower level act, but that the fly uses a map when exercising control. The same goes for the statement that the fly controls its movements by paying attention to the heat, and/or the color or patterns on the rotating arena.

Why an agent-centred intentional stance is required to explain operant conditioning

We can now explain why an agent-centred mentalistic account of operant conditioning is to be preferred to a goal-centred intentional stance. The former focuses on what the agent is trying to do, while the latter focuses on the goals of the action being described. A goal-centred stance has two components: an animal's goal and the information it has which helps it attain its goal. The animal is not an agent here: its goal-seeking behaviour is triggered by the information it receives from its environment.

By contrast, our account of operant conditioning includes not only information (about the animal's present state and end state) and a goal (or end state), but also an internal representation of the means by which the animal can attain its goal: by fine-tuning its generalised motor patterns - in other words, by performing actions, which require an agent with a mind of its own.

But why should a fine-tuned movement be called an action, and not a reaction? The reason is that fine-tuned movement is self-generated: it originates from within the animal's nervous system, instead of being triggered from without. Additionally, the animal takes the initiative when it looks for a correlation between its fine motor outputs and its sensory inputs, until it manages to attain the desired state. The goal-centred stance cannot predict the occurrence of fine-tuning behaviour, because such behaviour involves a two-way interplay between the agent adjusting its motor output and the new sensory information it receives from its environment.

The centrality of control in our account allows us to counter Gould's objection (2002, p. 41) that associative learning is too innate a process to qualify as cognition. In the case of instrumental conditioning, an organism forms an association between its own motor behaviour and a stimulus in its environment. Here the organism's learning content is indeed determined by its environment, while the manner in which it learns is determined innately by its neural hard-wiring. By contrast, in operant conditioning, both the content and manner of learning are determined by a complex interplay between the organism and its environment, as the organism monitors and continually controls the strength of its motor patterns in response to changes in its environment. One could argue that an organism's ability to construct its internal representations to control its own movements enables it to "step outside the bounds of the innate", as a truly cognitive organism should be able to do (Gould, 2002, p. 41).

A model of operant agency

We can now formulate a set of sufficient conditions for operant agency:

(i) innate preferences or drives;

(ii) motor programs, which are stored in the brain, and generate the suite of the animal's motor output;

(iii) an action selection mechanism, which allows the animal to make a selection from its suite of possible motor response patterns and pick the one that is the most appropriate to its current circumstances;

(iv) fine-tuning behaviour: efferent motor commands which are capable of stabilising a motor pattern at a particular value or within a narrow range of values, in order to achieve a goal;

(v) the ability to store and compare internal representations of its current motor output (i.e. its efference copy, which represents its current "position" on its internal map) and its afferent sensory inputs;

(vi) direct or indirect associations between different motor commands, sensory inputs (optional) and their consequences, which are stored in the animal's memory and updated when circumstances change;

(vii) a goal or end-state, which is internally encoded as a stored memory of a motor pattern or sensory stimulus that the animal associates with attaining its goal;

(viii) a pathway for reaching its goal, which is internally encoded as a stored memory of a sequence of movements or sensory stimuli which allows the animal to steer itself towards its goal;

(ix) sensory inputs that inform the animal whether it has attained its goal, and if not, whether it is getting closer to achieving it (the latter part is optional);

(x) a correlation mechanism, allowing it to find a temporal coincidence between its motor behaviour and the attainment of its goal;

(xi) self-correction: abandonment of behaviour that increases, and continuation of behaviour that reduces, the animal's deviation from its desired state.

Definition - "operant conditioning"

An animal can be described as undergoing operant conditioning if the following features can be identified:

Carruthers' argument against inferring mental states from conditioning

We can now respond to Carruthers' (2004) sceptical argument against the possibility of attributing minds to animals, solely on the basis of what they have learned through conditioning:

...engaging in a suite of innately coded action patterns isn't enough to count as having a mind, even if the detailed performance of those patterns is guided by perceptual information. And nor, surely, is the situation any different if the action patterns aren't innate ones, but are, rather, acquired habits, learned through some form of conditioning.

Carruthers argues that the presence of a mind is determined by the animal's cognitive architecture: in particular, whether it has beliefs and desires. I agree with Carruthers that neither the existence of flexible action patterns in an animal nor its ability to undergo conditioning warrants our ascription of mental states to it (Conclusion F.5 and Conclusion L.12). However, we should not assume that there is a single core cognitive architecture underlying all minds. The cognitive architecture presupposed by operant conditioning may be very different from that supporting other simple forms of mentalistic behaviour. The critical question is: does operant conditioning require us to posit beliefs and desires?

The role of belief and desire in operant conditioning.

In the operant conditioning experiments performed on Drosophila, we can say that the fly desires to attain its goal. The content of the fly's belief is that it will attain its goal by adjusting its motor output and/or steering towards the sensory stimulus it associates with the goal. For instance, the fly desires to avoid the heat, and believes that by staying in a certain zone, it can do so.

Why do we need to invoke beliefs and desires here? I have argued above that the existence of map-like representations which encode an animal's current status, goal and means of attaining it, and which underlie the two-way interaction between the animal's self-generated motor output and its sensory inputs during operant conditioning, requires us to adopt an agent-centred intentional stance. But it is generally understood that an individual cannot be an agent unless it has beliefs and desires. In the case of operant conditioning, the map-like representations described above should be considered as the agent's beliefs, and the goal should be regarded as its object of desire.

What I am proposing is that an animal's means-end representation formed by a process under the control of the agent deserves to be called a belief. By "control" I mean motor output from the brain and/or nervous system. During operant conditioning, the animal constructs its internal map by fine-tuning its motor movements. Organisms that are incapable of operant conditioning lack this control: protoctista, for instance, can sense the direction of their food by comparing inputs from both sides of their bodies, but their motor movements do not govern the construction of their "representations". Control also requires a bona fide individual: non-living things, lacking a telos, are ineligible for having beliefs.

The scientific advantage of a mentalistic account of operant conditioning

What, it may be asked, is the scientific advantage of explaining a fly's behaviour in terms of its beliefs and desires? If we think of the animal as an individual, probing its environment and fine-tuning its movements to attain its ends, we can formulate and answer questions such as:

- What sorts of events will the animal form operant beliefs about, and what sort of events will it ignore?
- What kinds of means can it encode as pathways to its ends?
- Why is it slower to acquire beliefs in some situations than in others?
- How does it go about revising its beliefs, as circumstances change?

Criticising the belief-desire account of agency

The belief-desire account of agency is not without its critics. Bittner (2001) has recently argued that neither belief nor desire can explain why we act. A belief may convince me that something is true, but then how can it also steer me into action? A desire can set a goal for me, but this by itself cannot move me to take action. (And if it did, surely it would also steer me.) Even the combination of belief and desire does not constitute a reason for action. Bittner does not deny that we act for reasons, which he envisages as historical explanations, but he denies that internal states can serve as reasons for action.

While I sympathise with Bittner's objections, I do not think they are fatal to an agent-centred intentional stance. The notion of an internal map answers Bittner's argument that belief cannot convince me and steer me at the same time. As I fine-tune my bodily movements in pursuit of my object, the sensory feedback I receive from my probing actions shapes my beliefs (strengthening my conviction that I am on the right track) and at the same time steers me towards my object. A striking feature of my account is that it makes control prior to the acquisition of belief: the agent manages to control its own body movements, and in so doing, acquires the belief that moving in a particular way will get it what it wants.

Nevertheless, Bittner does have a valid point: the impulse to act cannot come from belief. In the account of agency proposed above, the existence of the goal, basic motor patterns and an action selection mechanism - all of which can be explained in terms of a goal-centred intentional stance - were simply assumed. This suggests that operant agency is built upon a scaffolding of innate preferences and motor patterns. These are what initially moves us towards our object.

Bittner's argument against the efficacy of desire fails to distinguish between desire for the end (which is typically an innate drive, and may be automatically triggered whenever the end is sensed) and desire for the means to it (which presupposes the existence of certain beliefs about how to achieve the end). The former not only includes the goal (or end) but also moves the animal, through its innate drives. In a similar vein, Aristotle characterised locomotion as "movement started by the object of desire" (De Anima 3.10, 433a16). However, desire of the latter kind presupposes the occurrence of certain beliefs in the animal. An object X, when sensed, may give rise to X-seeking behaviour in an organism with a drive to pursue X. This account does not exclude desire, for there is no reason why an innate preference for X cannot also be a desire for X, if it is accompanied by an internal map. Desire, then, may move an animal. However, the existence of an internal map can only be recognised when an animal has to fine-tune its motor patterns to attain its goal (X), in other words, when the attainment of the goal is not straightforward.

Davidson on animal beliefs

Another argument that can be made against the possibility of belief and desire in animals, first formulated by Davidson (1975), is discussed and rejected by Carruthers (2004). First, it is claimed that beliefs and desires have a content which can be expressed in a sentence. To believe is to believe that S, where S is a sentence. The same goes for desire. Second, it is always absurd to attribute belief or desire that S to an animal, because nothing in its behaviour could ever warrant our ascription to it of beliefs and desires whose content we can specify in a sentence containing our concepts. The animal is simply incapable of the relevant linguistic behaviour. It follows that the ascription of beliefs and desires to animals is never justified.

Carruthers questions the first assumption. What it says is that in order for an animal to be thinking a thought, it must be possible to formulate the content of that thought in a that-clause, so that we (humans) can think the same thought, from the inside, with our concepts. This, says Carruthers, is tantamount to imposing a co-thinking constraint on genuine thoughts. Carruthers argues that there may be many thoughts that we are incapable of entertaining. An animal's concepts may be too alien for us to co-think them with the animal in the content of a that-clause.

Carruthers suggests there is an alternative way of characterising thoughts from the outside, using indirect descriptions. To borrow his example, even though we do not know what an ape's concept of a termite is, "we do know that she believes of the termites in that mound that they are there, and we know that she wants to eat them" (2004). Additionally, carefully planned experiments can help us to map the boundaries of the animal's concepts, and describe them from the outside.

Conclusions regarding operant agency

Operant conditioning is a form of learning which presupposes an agent-centred intentional stance.

DF.1 Animals that are capable of undergoing operant conditioning are bona fide agents that possess beliefs and desires.

Does operant conditioning require a first-person account?

Despite our attribution of agency, beliefs and desires to animals, the fact that we have characterised the difference between animals with and without beliefs in terms of internal map-like representations may suggest that a third-person account is still adequate to explain operant conditioning. Representations, after all, seem to be the sort of things that can be described from the outside.

Such an interpretation is mistaken, because it overlooks the dynamic features of the map-like representations which feature in operant beliefs. To begin with, there is a continual two-way interplay between the animal's motor output and its sensory input. Because the animal maintains an "efference copy" of its motor program, it is able to make an "inside-outside" comparison. That is, it can compare the sensory inputs arising from motor movements that are controlled from within with the sensory feedback it gets from its external environment. To do this, it has to carefully monitor, or pay attention to, its sensory inputs. It has to be able to detect both matches (correlations between its movements and the attainment of its goal) and mismatches or deviations: first, in order to approach its goal, and second, in order to keep track of it. The notions of "control" and "attention" employed here highlight the vital importance of the "inside-outside" distinction. In operant conditioning, the animal gets what it wants precisely because it can differentiate between internal and external events, monitor both, and use the former to manipulate the latter.

In short: to attempt to characterise operant conditioning from the outside is to ignore how it works. Some sort of first-person account is required here.

By contrast, in instrumental conditioning, the animal obtains its goal simply by associating an event which happens to be internal (a general motor pattern in its repertoire) with an external event. No controlled fine-tuning is required. Here, it is not the internal property of the motor pattern that explains the animal's achieving its goal, but the fact that the animal can detect temporal correlations between the motor behaviour and the external event.
